AI-Powered Custom Dashboards

Teams ignored the default dashboard and relied on ad‑hoc report requests or spreadsheet exports. I redesigned it into customizable, role‑based dashboards with AI summary reporting, increasing dashboard engagement by 71% during beta.

Phase 1 · Understand

Problem

Teams flooded us with ad‑hoc report requests or exported everything to spreadsheets. This slowed decision‑making, increased reporting overhead, and made the product look less "enterprise‑ready" in demos.

Objectives

- Personalized dashboard creation

- AI-generated executive reports

Research Insights

Research

- Study: 10 interviews (6 power users, 4 internal stakeholders) plus competitive analysis

- Finding: Users skimmed the default dashboard and went straight to exports; they wanted existing KPIs surfaced as quick‑scan cards organized by role.

- Constraint: Sales needed instant “wow” moments in demos, driving us toward role-based templates.

Design implications

- Implication 1: Prioritize role-based templates over a single generic layout.

- Implication 2: Make customization fast and obvious so users stop exporting to spreadsheets.

- Implication 3: Design one or two “demo‑ready” views that give sales instant visual impact.

Phase 2 · Decide

Design Approach & Decisions

- Scope to core roles: I focused the MVP on role-based templates instead of covering every edge case, and pushed back on "everything in v1" requests so we could ship polished core flows.

- AI with transparency: Stakeholders wanted AI to answer any KPI request, but testing showed users distrusted automated metrics without visible sources. I scoped AI to generate reports only from dashboard metrics users could verify, which let us expand it safely once we had real usage data.

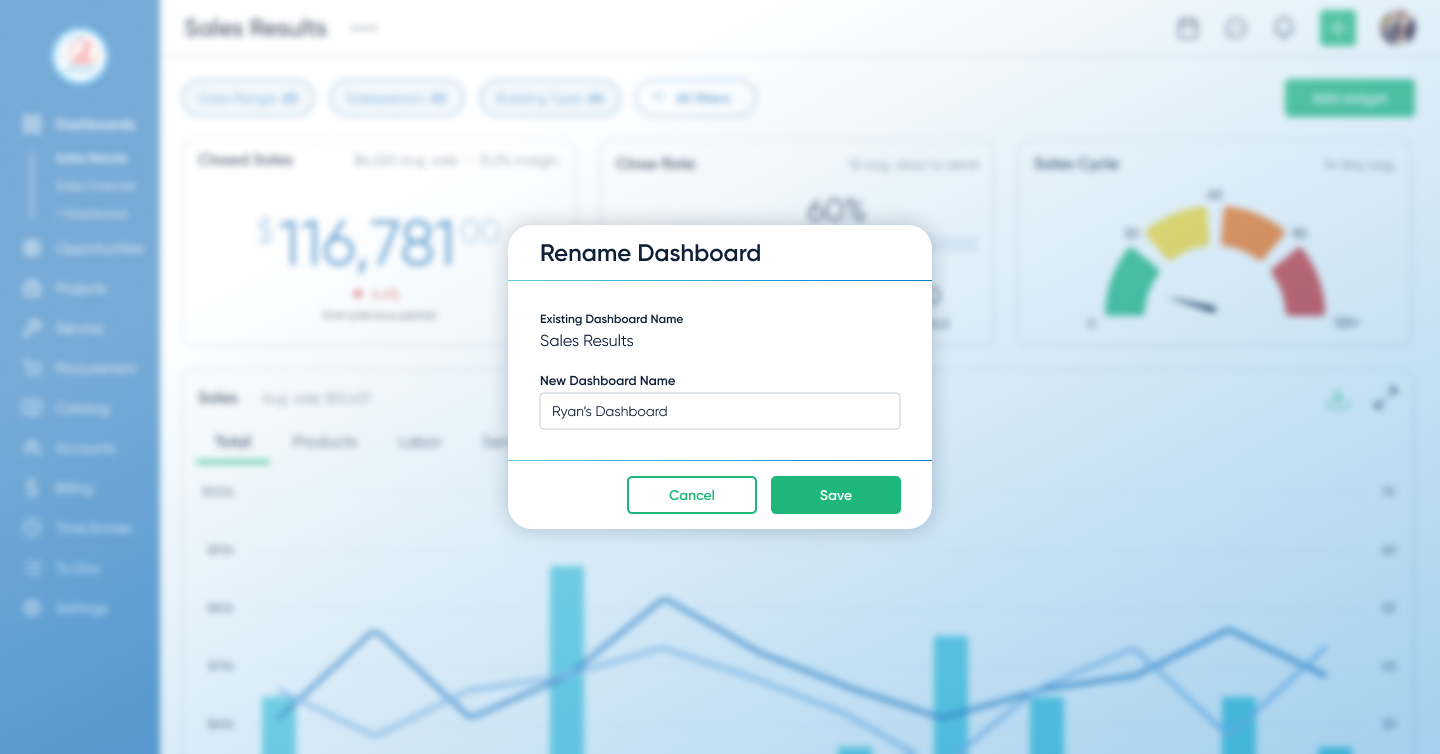

Design Highlights

Phase 3 · Evidence

Prototyping & Validation

I prototyped the end-to-end flow in Figma, from choosing a template to customizing widgets and generating an AI summary, and tested it with 6 target users.

Iteration: more KPI cards, more control

Early concepts kept dashboards minimal. Research showed teams wanted a large set of KPI cards for the metrics they already knew we tracked, and they wanted to rearrange and edit those cards to match how they run their day. I expanded the design to support dense KPI cards with fast drag‑and‑drop editing and rearranging. That increased scope and extended the timeline, but it removed the core adoption blocker: a lack of visibility and control.

- Role-based templates: 6/6 participants said the templates matched how they think about their work and were faster than building from scratch

- Customization: All participants successfully created multiple dashboards and edited widgets without guidance

These sessions de‑risked the core flow for beta and gave us the confidence to defer advanced metrics and broader AI until we had real usage data.

Impact

Even in beta, dashboard engagement rose 71%, and the redesigned dashboards changed how teams monitored their work: more teams used dashboards, created multiple views, and engaged more deeply with widgets instead of relying on ad‑hoc exports and one‑off report requests.

Phase 4 · Forward Thinking

Next Steps

- Depth for power users: Add advanced metrics and drill‑downs requested by power users before GA, while keeping default dashboards simple and focused.

- AI expansion: Gradually expand AI to suggest cross‑dashboard insights once we can validate accuracy and trust with real usage data.